
What if you could hold up an iPad to an aircraft and see the locations of all the previous repairs in an interactive 3D display? The technological foundation for that type of application might be here sooner than expected, thanks to a recent Supercluster project led by Boeing’s Vancouver lab.

The project, called Augmented Reality for Maintenance and Inspection, focuses on “streamlining and improving” critical aircraft inspection and maintenance processes. Currently, inspectors rely on written records and photographs of an aircraft produced by previous inspectors. Mechanics and technicians often map damage and repairs manually, and the information must be checked carefully to ensure that the recorded locations are accurate.

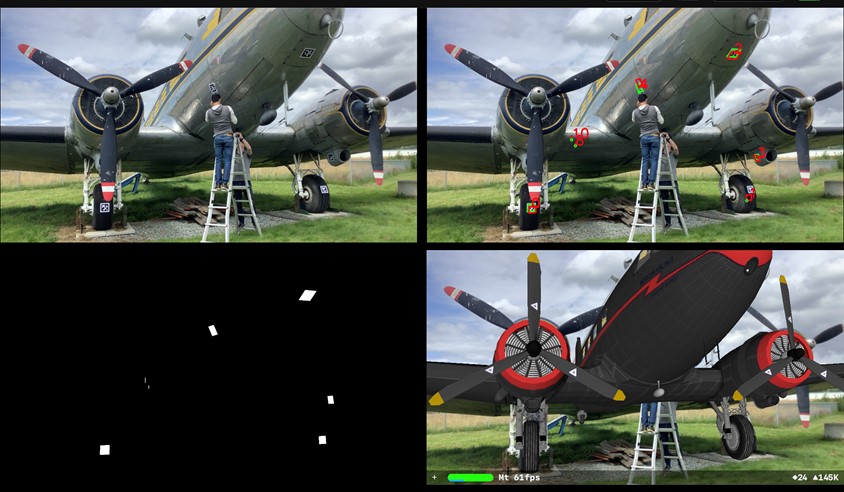

The idea behind the Supercluster project is to develop a “specialized” augmented reality engine that uses current and historical data to easily compare an aircraft’s present and past states. It builds on the concept behind Boeing’s signature Turn Time iPad application, which features 3D models of different aircraft and displays the locations of defects and dents that have accumulated over time.

“Our high-level idea was to take that app, and replace that view of the 3D model of these dents and buckles with an augmented reality view — where you could take the iPad, hold it up, and look through the camera at the actual plane,” explained Jack Hsu, project lead, Boeing Vancouver.

“Then on the plane that you’re seeing through the camera, you would also see virtual dots representing the locations of the dents and buckles directly on the aircraft,” he continued. “You could turn on different color layers; for example, ‘green dots’ could be displayed to show all existing defects. And if you spot new damage, you could touch the iPad and indicate that there’s new damage [in a particular area], and it would be represented as a red dot.

“You can also pick existing damage, by touching the iPad, and then get metadata associated with the damage — such as: when was it recorded? Is there a picture of it? What’s the size of the defect?

“That’s what we’re trying to create.”
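To make the layered display Hsu describes more concrete, here is a minimal Python sketch of how a defect record and its color layers might be modeled. The fields (position, date recorded, size, optional photo) simply mirror the metadata Hsu lists; the names `DamageRecord`, `visible_markers`, `describe`, and the file name in the example are hypothetical and do not reflect Turn Time’s actual data model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical layer-to-color mapping, following Hsu's example:
# green for existing defects, red for newly reported damage.
LAYER_COLORS = {"existing": "green", "new": "red"}


@dataclass
class DamageRecord:
    """One defect marker, positioned in the aircraft model's own 3D frame."""
    x: float
    y: float
    z: float
    layer: str                   # "existing" or "new"
    recorded_on: date            # when the damage was recorded
    size_mm: float               # size of the defect
    photo: Optional[str] = None  # path to a photo of the damage, if any


def visible_markers(records, enabled_layers):
    """Return (record, color) pairs for every record whose layer is switched on."""
    return [(r, LAYER_COLORS[r.layer]) for r in records if r.layer in enabled_layers]


def describe(record):
    """Summarize the metadata shown when a technician taps a marker."""
    photo = record.photo if record.photo else "no photo"
    return f"recorded {record.recorded_on.isoformat()}, {record.size_mm:g} mm, {photo}"


records = [
    DamageRecord(12.0, 1.5, 2.0, "existing", date(2019, 5, 3), 25.0, "dent_0412.jpg"),
    DamageRecord(20.4, -1.1, 1.8, "new", date(2020, 2, 10), 8.0),
]
for rec, color in visible_markers(records, {"existing"}):
    print(color, describe(rec))  # prints: green recorded 2019-05-03, 25 mm, dent_0412.jpg
```

Keeping each marker’s position in the aircraft’s own coordinate frame, rather than in screen pixels, is what would let the same records be drawn from any viewing angle once the iPad’s pose is known.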

Boeing partnered with B.C.’s Simon Fraser University and Unity Canada on the research, with each partner bringing something different to the table. Boeing managed the first stage of the project and provided aviation-industry and Apple iPad expertise. Unity, a world leader in video game engine development, contributed its augmented reality expertise and worked on image generation. Simon Fraser University, the research partner, provided machine learning expertise, and all three partners contributed to the machine learning algorithm itself.

Rather than developing a dedicated app, Boeing and its partners are looking to build the augmented reality technology as a component that can be embedded into different applications, like Turn Time, and added as a feature to a particular product. “Which is basically what we did on this project,” said Hsu.

“The idea is that there would be a piece of software — an app that resides on an augmented reality device [like an iPad],” he continued. “The app would implement this augmented reality view of the world. And encapsulated within that piece of software is a machine learning and artificial intelligence model that you develop outside of the app.”

The technology would be packaged so that it could be used for aircraft inspections, as well as for inspecting other large transportation vehicles and machinery in industries outside of aviation.

Hsu emphasized that the project partners “took a machine learning approach to solving our use-case.”

He added: “What we’re trying to do is have [aircraft technicians] point the iPad at the aircraft, and automatically through the image itself [the software would] recognize the aircraft’s orientation.”

Achieving this requires a machine learning algorithm. “You need to train the algorithm to the point where you can point any iPad at a plane and it can automatically calculate its X-Y-Z position and orientation,” said Hsu.

“Once you do that, then you can start placing these virtual objects into the viewing space, which in our case are dots,” he said. In this application, the dots mark previous repair and inspection locations.
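Once the model has estimated where the iPad is relative to the aircraft, placing a dot on screen is standard pinhole-camera geometry. The Python sketch below assumes the pose is given as a rotation matrix `R` and translation `t` that map aircraft coordinates into the camera frame, and that the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are known; the function name, conventions, and example numbers are illustrative assumptions, not the project’s implementation.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project_point(
    p: Vec3,
    R: Sequence[Sequence[float]],
    t: Vec3,
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> Optional[Tuple[float, float]]:
    """Project a point given in aircraft coordinates into pixel coordinates.

    R (3x3) and t map aircraft coordinates into the camera frame, where the
    camera looks along +z and image y grows downward. Returns None when the
    point is behind the camera or falls outside the image.
    """
    # Rigid transform: aircraft frame -> camera frame.
    xc, yc, zc = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    if zc <= 0:
        return None  # behind the camera, so no dot is drawn
    # Pinhole model: perspective divide, then scale and shift by the intrinsics.
    u = fx * xc / zc + cx
    v = fy * yc / zc + cy
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None


# Example: camera 10 m from the aircraft origin, axes aligned, 1920x1080 image.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
dent = (1.0, 0.5, 0.0)
print(project_point(dent, identity, (0.0, 0.0, 10.0), 1000, 1000, 960, 540, 1920, 1080))
# prints: (1060.0, 590.0)
```

AR frameworks such as Apple’s ARKit can report the camera intrinsics for each frame, so the hard part, and the one the team targeted with machine learning, is estimating `R` and `t` from the image of the aircraft itself.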

The challenge is that, to learn to determine position this way, the algorithm would need hundreds of thousands of images of planes, covering different viewing angles, lighting conditions, liveries, hangar backgrounds and more.

“And if you’re actually trying to collect those images with real aircraft, in actual hangars, in different conditions… it’s just not feasible,” said Hsu.

This is where Boeing and its partners came up with what Hsu referred to as a “leading-edge” solution: using synthetic data to train the machine learning model. “Synthetic data means you generate pictures — these are not real pictures — but you actually get the system to generate pictures at all sorts of different viewing angles, lighting conditions, etc.,” he explained.
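One common way to produce such training data, often called domain randomization, is to sample scene parameters at random and render one image per sample. The Python sketch below only generates the parameter list (camera position and heading, lighting, livery, background); the rendering itself would happen in an engine such as Unity. The value ranges, asset names, and the `RenderSpec`/`make_specs` names are assumptions for illustration, and the article does not say exactly how the team sampled its scenes.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical asset names; a real pipeline would point at livery textures
# and hangar environments inside the rendering engine.
LIVERIES = ["livery_a", "livery_b", "livery_c"]
BACKGROUNDS = ["hangar_bright", "hangar_dim", "apron_daylight"]


@dataclass
class RenderSpec:
    camera_xyz: Tuple[float, float, float]  # camera position in aircraft frame (m), z up
    yaw_deg: float                          # camera heading, pointing back at the aircraft
    pitch_deg: float                        # negative means looking down
    light_intensity: float                  # arbitrary units
    livery: str
    background: str


def sample_spec(rng: random.Random) -> RenderSpec:
    """Draw one random scene; its camera pose doubles as the training label."""
    azimuth = rng.uniform(0.0, 360.0)     # anywhere around the aircraft
    elevation = rng.uniform(-10.0, 45.0)  # from slightly below to well above
    distance = rng.uniform(8.0, 60.0)     # metres from the aircraft origin
    az, el = math.radians(azimuth), math.radians(elevation)
    camera = (
        distance * math.cos(el) * math.cos(az),
        distance * math.cos(el) * math.sin(az),
        distance * math.sin(el),
    )
    return RenderSpec(
        camera_xyz=camera,
        yaw_deg=(azimuth + 180.0) % 360.0,  # face back toward the origin
        pitch_deg=-elevation,
        light_intensity=rng.uniform(200.0, 2000.0),
        livery=rng.choice(LIVERIES),
        background=rng.choice(BACKGROUNDS),
    )


def make_specs(n: int, seed: int = 0) -> List[RenderSpec]:
    """Generate n scene specs reproducibly from a fixed seed."""
    rng = random.Random(seed)
    return [sample_spec(rng) for _ in range(n)]


for spec in make_specs(3):
    print(spec)
```

The appeal of this approach is that every synthetic image comes with an exact label at no extra cost: because the scene was built from the spec, the camera pose the model must learn to predict is already known, which is precisely what is so hard to collect with real aircraft in real hangars.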

Hsu said the first stage of the research project, which began in January 2020 before pandemic closures in North America, had “very promising” results. The initial focus was on displaying repair locations on aircraft surfaces and on testing the viability of the augmented reality technology. Next, Hsu said, the team hopes to use the machine learning algorithm to create a system that can look under the aircraft’s skin at other parts and components.

The team is also hoping to look at maintenance “work guidance” in the future, such as virtually disassembling an object. For example, “to be able to have a virtual component anchored on top of the image that you’re seeing of the real component, and then see virtual screws getting removed,” explained Hsu. “You could animate that whole sequence of what you need to do to repair the component. So we’re excited about exploring that avenue once we get the anchoring problem solved.”

With the first stage of the research complete, Hsu estimates it may take another year to put this augmented reality capability into production. Boeing is currently preparing a proposal to continue the research with the same partners in the next stage of the project.